Kelly Warner Law Firm Blames USA Herald for Arizona Bar Investigation

In what appears to be a desperate attempt to defend against multiple allegations of fraud on the courts, the Kelly Warner Law…

By – USA Herald

Aaron Kelly Law Firm Resorts To Attacking Former Client Again On KellyWarnerLaw.com – Pattern Recognized

Attorney Aaron Kelly and his law partner Daniel Warner are currently under investigation by the Arizona Bar for legal misconduct.…

By – Jeff Watterson

Arizona Bar Opens Investigation on Attorney Aaron Kelly

USA Herald recently reported on a developing story involving Attorneys Daniel Warner and Aaron Kelly. Both Warner and Kelly have…

By – Paul O'Neal

Diddy Set To Be Released Early According To Federal Bureau of Prisons

Key Takeaways: Sean “Diddy” Combs is now tentatively scheduled for release on April 25, 2028, three months earlier than previously…

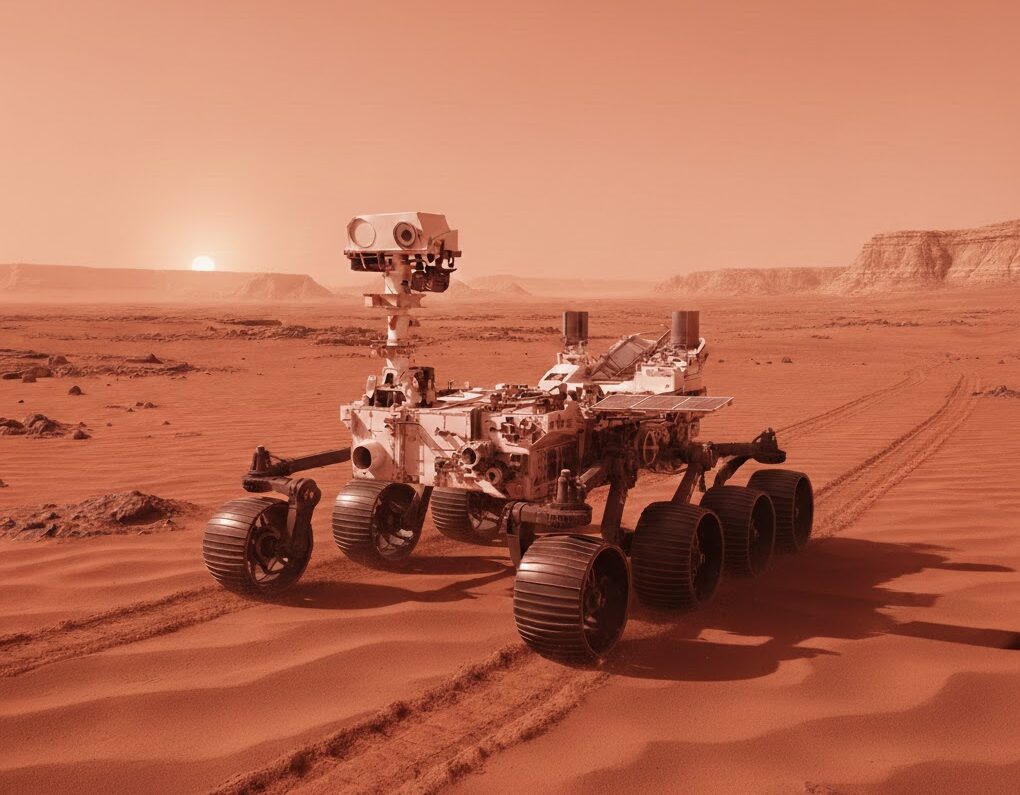

By – Samuel Lopez

Planetary Defense Was Never Just About 3I/ATLAS — It Was About Readiness

[USA HERALD] – A few months ago, when 3I/ATLAS was lighting up the headlines and dominating the algorithm, the phrase “planetary defense”…

By – Samuel Lopez

Justin Timberlake Moves To Block Release of DWI Bodycam Footage In Sag Harbor

New York – A high-profile arrest in a wealthy Long Island village. A Freedom of Information request filed by members…

By – Samuel Lopez

OpenAI Changes Deal With US Military After Backlash and Surveillance Fears

In a swift reversal that underscores the volatile intersection of Silicon Valley and the battlefield, OpenAI changes deal with US…

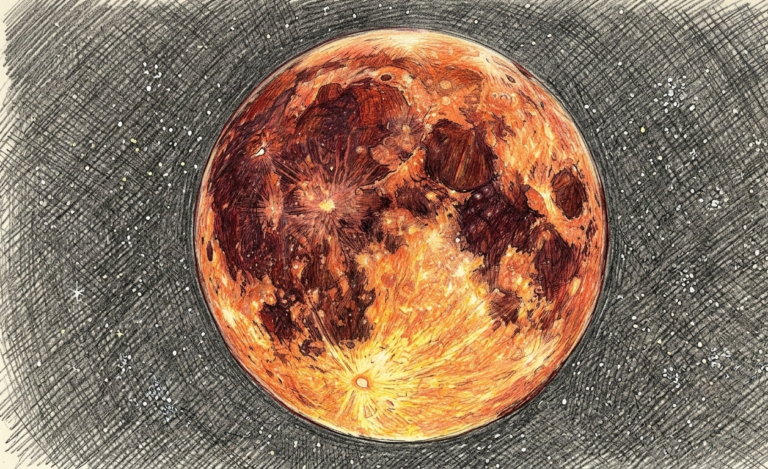

By – Rihem Akkouche

‘Blood Moon’ Eclipse Tonight Dazzles Skywatchers Worldwide

The countdown is nearly over for the ‘Blood Moon’ Eclipse Tonight, a celestial spectacle set to stain the night sky…

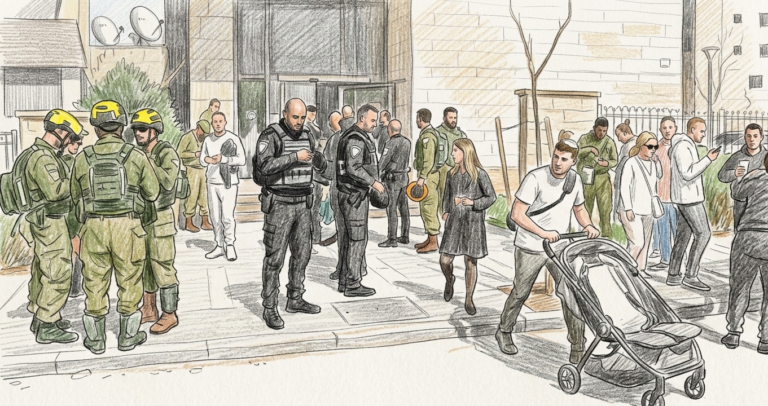

By – Rihem Akkouche

DEPART NOW: US Citizens Urged to Leave Middle Eastern Countries as Conflict Spirals

The warning came blunt and urgent: US citizens urged to leave Middle Eastern countries as violence surges and the region…

By – Rihem Akkouche

US Embassy in Saudi Arabia Struck as Iran Widens Conflict

The US embassy in Saudi Arabia struck — a dramatic escalation in a conflict that is now ricocheting across the…

By – Rihem Akkouche

Escalating Middle East War: Drone Strikes Hit U.S. Embassy in Saudi Arabia As Hundreds Reported Dead in Iran

The Middle East is facing a rapidly expanding military conflict after drone strikes targeted the U.S. diplomatic compound in Riyadh…

By – Tyler Brooks

Michigan Woman Wins Record $822K Lottery Jackpot After Pizza Lunch Stop

A simple lunch break stop for pizza turned into a life-changing moment for a Michigan woman who won the largest…

By – Ahmed Boughalleb

Nancy Guthrie Investigation Continues as Person of Interest Released and Home Searched in Rio Rico

Authorities in Arizona are intensifying their search for Nancy Guthrie, 84, the mother of “Today” co-host Savannah Guthrie, who has…

By – Ahmed Boughalleb

What is Aegosexuality?

As conversations around sexuality continue to expand, so does the language we use to describe it. Sexuality terms are gaining…

By – Jackie Allen

Donald Trump Responds as Iranian Protesters Gain Ground in Unprecedented Nationwide Uprising

Iran’s Supreme Leader Ali Khamenei is openly blaming Donald Trump as nationwide protests continue to expand and intensify. And Donald…

By – Jackie Allen

Savannah Guthrie’s Mom Missing in Arizona: Search Intensifies as FBI Joins Investigation

The desperate search for Savannah Guthrie’s mom continues in Arizona, with authorities entering the fourth day of a widening investigation…

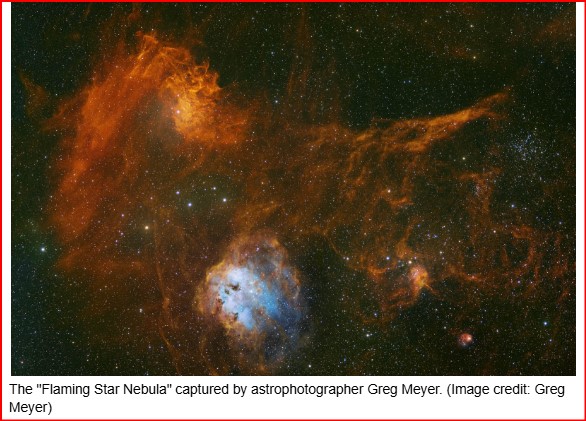

By – Jackie Allen

Flaming Star Nebula: A Runaway Star Sets the Cosmos Aglow

Astrophotographer Greg Meyer has unveiled a breathtaking portrait of the Flaming Star Nebula, where the brilliant blue star AE Aurigae…

By – Jackie Allen

Linked to China: Google Engineer Linwei Ding Convicted in Landmark AI Espionage Case

A federal jury has convicted former Google software engineer Linwei Ding of stealing a trove of the company’s most sensitive…

By – Jackie Allen

Mike Tyson Urges Americans to ‘Eat Real Food’ in Emotional Super Bowl Ad Highlighting Health Risks

Boxing legend Mike Tyson is using his platform ahead of Super Bowl 60 to address a personal and national health…

By – Tyler Brooks

Deadly “Death Cap” Mushrooms in California Cause Multiple Deaths and Liver Transplants Amid Rare Super Bloom

California health officials are warning the public after four deaths and three liver transplants linked to the highly toxic death…

By – Ahmed Boughalleb

From Migraines to Miracles: How Becca Valle Survived a Glioblastoma Diagnosis Against the Odds

Becca Valle, 41, thought her headaches were just migraines—until a sudden, unbearable pain revealed something far more serious. In September…

By – Tyler Brooks

New York Approves Medical Aid in Dying for Terminally Ill Patients

New York Governor Kathy Hochul on Friday signed a law allowing terminally ill residents with less than six months to…

By – Tyler Brooks

California Jury Awards $25 Million to Man Who Developed Lung Disease Linked to PAM Butter-Flavored Cooking Spray

A California civil jury has awarded $25 million to Ronald Esparza after finding Conagra Brands liable for causing his debilitating…

By – Tyler Brooks

Medtronic Antitrust Lawsuit Update: Jury Weighs $381M Claim in Applied Medical Device Dispute

The legal fight between Medtronic and Applied Medical has entered a critical phase, with jurors now weighing whether the medical…

By – Ahmed BoughallebWhat is Aegosexuality?

As conversations around sexuality continue to expand, so does the language we use to describe it. Sexuality terms are gaining…

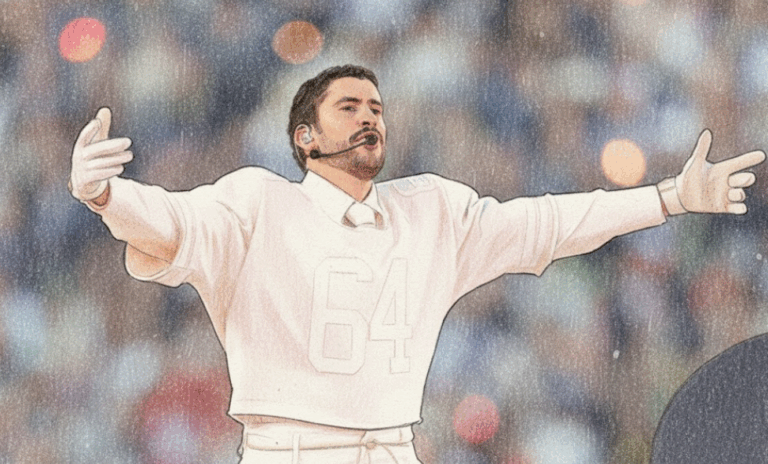

By – Jackie AllenBad Bunny’s Super Bowl Halftime Show Breaks Language Barriers and Ignites Cultural, Political Debate

Bad Bunny’s headlining performance at the Super Bowl 60 halftime show is drawing national attention, with fans and critics debating…

By – Tyler BrooksDonald Trump Responds as Iranian Protesters Gain Ground in Unprecedented Nationwide Uprising

Iran’s Supreme Leader Ali Khamenei is openly blaming Donald Trump as nationwide protests continue to expand and intensify. And Donald…

By – Jackie AllenSavannah Guthrie’s Mom Missing in Arizona: Search Intensifies as FBI Joins Investigation

The desperate search for Savannah Guthrie’s mom continues in Arizona, with authorities entering the fourth day of a widening investigation…

By – Jackie AllenFlaming Star Nebula: A Runaway Star Sets the Cosmos Aglow

Astrophotographer Greg Meyer has unveiled a breathtaking portrait of the Flaming Star Nebula, where the brilliant blue star AE Aurigae…

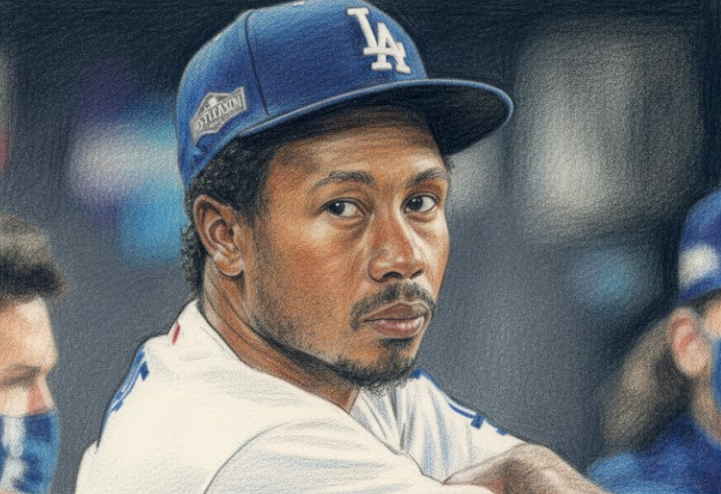

By – Jackie AllenTerrance Gore, Former MLB Star and Three-Time World Series Champion, Passes Away at 34

Terrance Gore, a former Major League Baseball outfielder celebrated for his blazing speed and clutch base running, has died at…

By – Tyler Brooks