Non-Hallucinated Facts

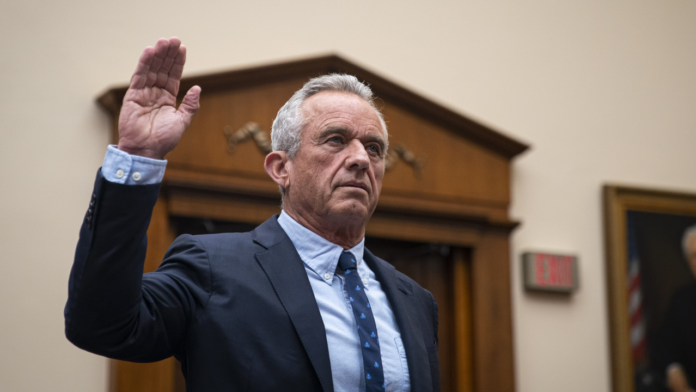

- RFK Jr.’s MAHA policy report is facing intense scrutiny today amid widespread reports that AI-generated citations, possibly produced by ChatGPT, made their way into its scientific claims.

- The legal profession continues to grapple with the pervasive issue of AI-hallucinated legal citations in court filings, leading to sanctions and disciplinary actions against attorneys.

- Despite the known risks, lawyers and litigants continue to use AI for legal research, underscoring the need for stronger human oversight and rigorous validation protocols.

By Samuel Lopez – USA Herald

NEW YORK, NY – May 30, 2025 – Concerns about artificial intelligence “hallucinations” are escalating from isolated incidents to a broad threat to informational integrity, with repercussions in both politics and law. Mainstream news outlets are reporting that a high-profile policy document, the Make America Healthy Again (MAHA) report attributed to RFK Jr., allegedly contains scientific citations generated by AI, possibly ChatGPT, raising questions about its veracity. The reports arrive as the USA Herald continues to chronicle a troubling trend of AI-fabricated legal citations appearing in high-profile court cases, with a growing list of professional consequences for the attorneys involved.

Sharp-eyed online readers, acting as an informal but effective fact-checking corps, reportedly flagged the suspicious citations in the MAHA report. Their findings spread quickly across social media and news platforms and drew swift criticism from experts, who called the alleged use of AI in this context “sloppy” and “shoddy.” The episode highlights a widening gap between the efficiency AI promises and the need for human diligence and accuracy, a tension that is especially acute in fields where factual precision is paramount.

The MAHA report’s alleged AI-generated content is a stark, public example of a problem that has been festering in the legal industry for some time. Just last week, the USA Herald reported on a case in which a California judge sanctioned attorneys for submitting a brief riddled with fabricated citations and quotations produced by AI tools. The judge’s remarks showed how close such errors can come to corrupting the judicial record: “I read their brief, was persuaded (or at least intrigued) by the authorities that they cited, and looked up the decisions to learn more about them – only to find that they didn’t exist. That’s scary. It almost led to the scarier outcome (from my perspective) of including those bogus materials in a judicial order.”

This incident is far from isolated. The USA Herald has reported extensively on a string of high-profile cases in recent months involving AI-hallucinated legal citations. Attorneys have faced serious sanctions, ranging from financial penalties to referrals to oversight bodies for professional discipline. Despite these escalating risks, a concerning pattern persists: lawyers, law firms, and even self-represented litigants continue to use AI for legal research and document drafting, often without the necessary safeguards. This reliance reflects a broader challenge: the pull of AI-driven efficiency remains strong even when weighed against the risk of serious professional liability.

In a legal industry increasingly shaped by AI, the rise of “AI hallucinations” (fabricated legal citations generated by large language models) has made accuracy and citation integrity a top concern. The USA Herald’s analysis indicates that while the threat is real, it is not inevitable. The answer to the critical question, “Can you trust your legal AI to get it right?”, is a qualified yes, but only with important caveats and robust human review.

Top-tier firms, acutely aware of the pitfalls, are implementing multi-layered strategies to mitigate these risks. This proactive approach underscores a fundamental principle: AI tools are powerful assistants, but they are not, and cannot be, autonomous legal authorities.